Don't forget to star the repo if you find this useful.

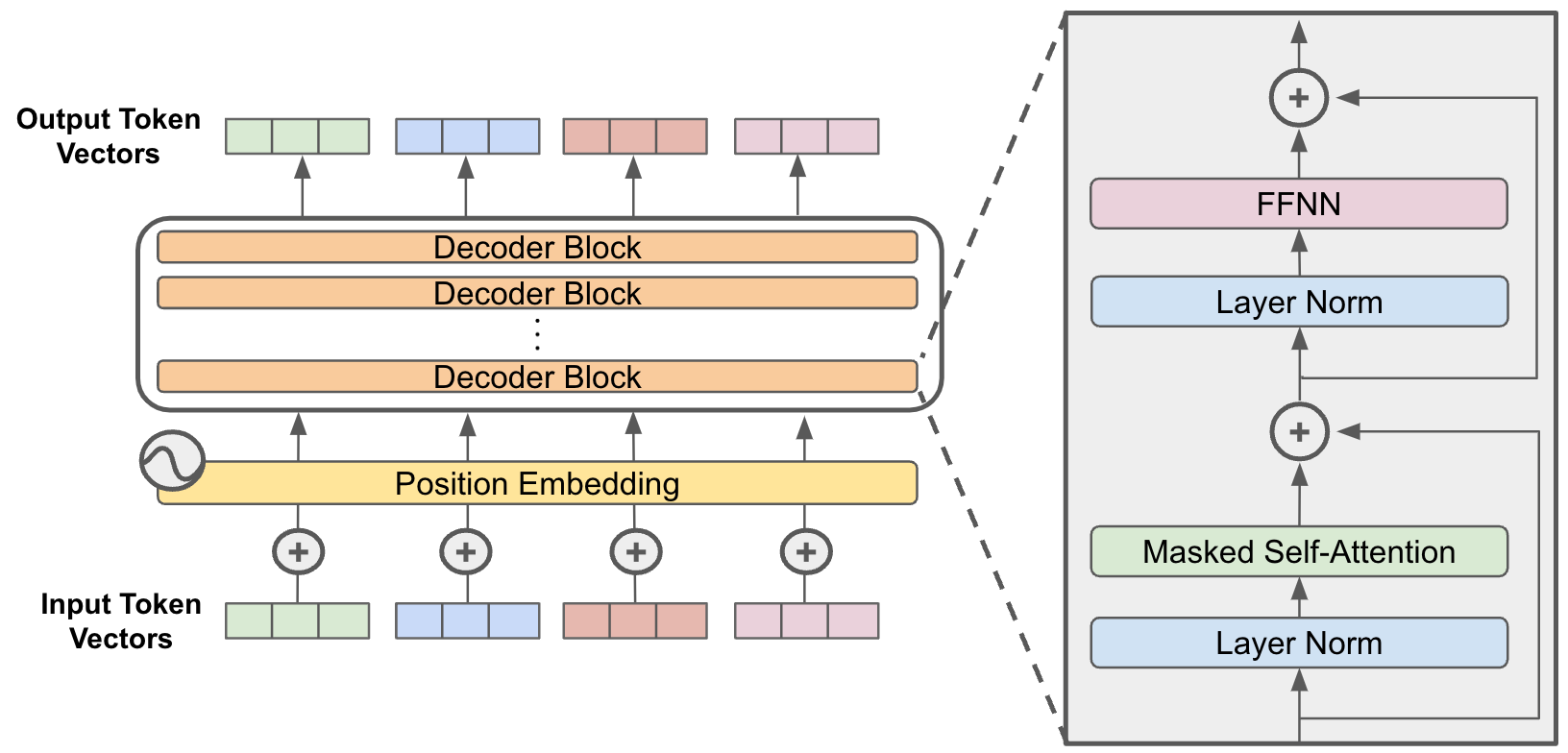

This repository features a custom-built decoder-only language model (LLM) with 8 decoder blocks and a total of 37 million parameters. I developed it from scratch as a personal project to explore NLP techniques, and you can now pretrain it to fit whatever task you need.

If you wish to run the code step by step to see how everything works, check out the Decoder-Only transformer notebook in the repo, where I train the model to ask a question from a given context.

- Clone repository

git clone git@github.com:logic-ot/decoder-only-llm.git

cd decoder-only-llm

- Configuration file

- Edit the variables in config.py, such as the dataset path, to suit your setup.

Structure of config.py:

    import torch
    from transformers import AutoTokenizer

    config = {
        "data_path": "sample_data.json",  # path to dataset
        "tokenizer": AutoTokenizer.from_pretrained("bert-base-uncased"),
        "device": torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu"),
        "model_weights": None,  # path to model weights
        "learning_rate": 0.0001,
        "num_batches": 10,
    }
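Before launching training, it can help to sanity-check the values you set. The sketch below is not part of the repo: `validate_config` is a hypothetical helper that assumes the key names shown above, and the checks themselves are my own guesses at sensible constraints. It only inspects the plain-Python fields and leaves the tokenizer and device entries alone.

```python
import os

# Key names taken from the config.py structure shown above.
REQUIRED_KEYS = ("data_path", "tokenizer", "device", "model_weights",
                 "learning_rate", "num_batches")


def validate_config(config):
    """Return a list of human-readable problems found in a config dict.

    Hypothetical helper: the rules below are assumptions, not the repo's own.
    """
    problems = []
    for key in REQUIRED_KEYS:
        if key not in config:
            problems.append(f"missing key: {key}")

    data_path = config.get("data_path")
    if not isinstance(data_path, str) or not data_path:
        problems.append("data_path should be a non-empty string")

    # model_weights may be None (train from scratch) or a path to a file.
    weights = config.get("model_weights")
    if weights is not None and not os.path.isfile(weights):
        problems.append(f"model_weights file not found: {weights}")

    lr = config.get("learning_rate")
    if not isinstance(lr, (int, float)) or isinstance(lr, bool) or lr <= 0:
        problems.append("learning_rate should be a positive number")

    n = config.get("num_batches")
    if not isinstance(n, int) or isinstance(n, bool) or n <= 0:
        problems.append("num_batches should be a positive integer")

    return problems
```

Calling `validate_config(config)` right after importing `config` returns an empty list when nothing looks off, so it can be dropped in front of a training run without changing anything else.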


- Training

- After configuration, run the training script like so:

python training.py

The model should automatically begin training.


- Data Structure

- Your data for training must be in the following format:

[{"conversation": [{"from": "human", "value": "{some text}"}, {"from": "gpt", "value": "{some text}"}]}]

See an example in sample_data.json in the repo.
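To make the format concrete, here is a minimal sketch that reads a file laid out this way and pulls out (human, gpt) text pairs. It assumes the file is a JSON list of objects, each holding a `conversation` list in which a `human` turn is followed by a `gpt` turn. `load_pairs` is a hypothetical helper, not a function from the repo.

```python
import json


def load_pairs(path):
    """Return a list of (human_text, gpt_text) tuples from a dataset file.

    Assumes the top level is a list of {"conversation": [...]} records.
    """
    with open(path, encoding="utf-8") as f:
        records = json.load(f)

    pairs = []
    for record in records:
        turns = record["conversation"]
        # Pair each human turn with the gpt turn that directly follows it.
        for prev, nxt in zip(turns, turns[1:]):
            if prev["from"] == "human" and nxt["from"] == "gpt":
                pairs.append((prev["value"], nxt["value"]))
    return pairs
```

Running `load_pairs("sample_data.json")` is a quick way to confirm that your own dataset parses and that every human turn has a matching response before you start training.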


- Inferencing

- The inference script takes in a text and then queries the model for a response. To run inference:

python inference.py
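For intuition about what happens between the input text and the response: a decoder-only model generates one token at a time, feeding each new token back in as input. The sketch below shows greedy decoding with a stand-in scoring function. `greedy_decode`, `next_token_scores`, `eos_id`, and `toy_model` are illustrative names of my own, and inference.py may well use a different decoding strategy.

```python
def greedy_decode(next_token_scores, prompt_ids, max_new_tokens=20, eos_id=None):
    """Autoregressively extend prompt_ids by picking the top-scoring token.

    next_token_scores: callable mapping a list of token ids to a list of
    scores, one per vocabulary entry (a stand-in for a real model's logits).
    """
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        scores = next_token_scores(ids)
        # Greedy choice: index of the highest score.
        next_id = max(range(len(scores)), key=scores.__getitem__)
        ids.append(next_id)
        if eos_id is not None and next_id == eos_id:
            break
    # Return only the newly generated tokens.
    return ids[len(prompt_ids):]


def toy_model(ids):
    """Toy stand-in: always prefers (last token + 1) modulo a vocab of 5."""
    scores = [0.0] * 5
    scores[(ids[-1] + 1) % 5] = 1.0
    return scores


print(greedy_decode(toy_model, [0], max_new_tokens=10, eos_id=4))  # [1, 2, 3, 4]
```

In a real setup the prompt ids would come from the tokenizer and the scores from the trained model; greedy selection is only the simplest option, and sampling-based strategies are common alternatives.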

- I have a what-to-expect.pdf file in the repo that details some observations I made while training the model for the question-asking task. This information applies to any other model you decide to pretrain.

- Here is an excellent video that explains transformers: 3Blue1Brown